Hi, everyone,

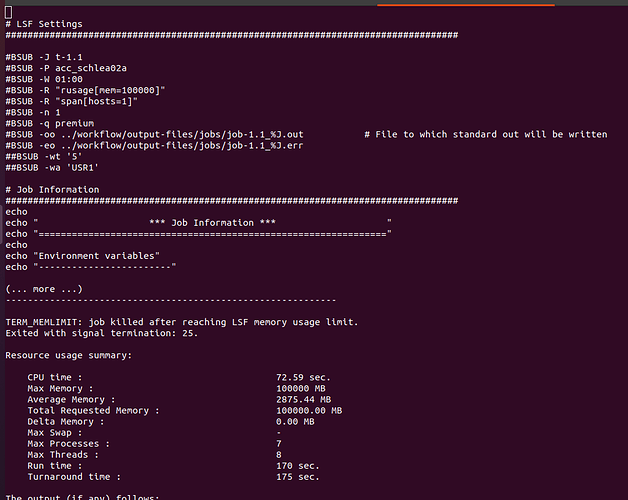

Curious if anyone else is having a similar issue to mine. I’m using an LSF batch submission system and running VirtualFlow 1.0 on a group of large tranches (lead-like molecules, as defined by ZINC20), which comes to about 566 million compounds. I’m able to start the workflow successfully with the vf_start_jobline script, and my jobs begin submitting to the batch system. I’m submitting a total of 1000 jobs, one every 30 seconds. However, the jobs quickly die because they hit the memory limit I’ve requested per core. I’ve tried increasing the memory up to 100 GB with 1 core (see image), but that doesn’t seem realistic.

Am I doing something wrong, or do some of the larger tranches really require that much memory?

I’d appreciate any advice or wisdom on this.

Thanks!